CoTools and the Future of LLM Tool Use for Complex Reasoning

Imagine asking an AI, “What’s the best route to work tomorrow morning?” and receiving a response that not only understands your intent but actually checks live traffic data via an external API and gives you the optimal route.

This isn’t a future concept. It’s happening right now—with Chain-of-Tools (CoTools), a framework designed for effective LLM tool use.

CoTools is an innovative framework that expands the capabilities of large language models (LLMs), allowing them to interact with external tools like APIs, databases, or calculators—all without retraining or compromising their core reasoning ability. It enables LLMs to reason and act, marking a shift from static text prediction to dynamic decision-making and tool-augmented AI.

In this blog, we’ll break down how the Chain-of-Tools framework works, why LLM tool integration matters, and how it’s changing the game for real-world AI applications.

Why do LLMs need external tool access?

Large language models are highly capable at understanding and generating text, but on their own they struggle with tasks that require:

- Real-time data access (e.g., live weather forecasts, breaking news, current traffic conditions)

- Precise numerical calculations (beyond basic arithmetic)

- Interaction with external tools or API integration

For instance, ask a standard LLM, “What’s the weather in Tokyo tomorrow?”—you’ll likely get a prediction based on its training data, not a live forecast derived from an external source of truth.

While previous workarounds like fine-tuning or in-context learning offer partial solutions for LLM tool use, they fall short at scale, especially when dealing with many or unseen tools:

| Feature | Fine-Tuning | In-Context Learning | CoTools (Chain-of-Tools) |
|---|---|---|---|
| Flexibility with Tools | ❌ Limited | ✅ High | ✅ High |
| Efficiency | ✅ High | ❌ Low | ✅ High |
| Use of Unseen Tools | ❌ No | ✅ Yes | ✅ Yes |
| Preserved LLM Reasoning | ❌ Can be affected | ✅ Yes | ✅ Yes |

CoTools presents a scalable, modular alternative that lets an LLM decide on its own when and how to use tools, without modifying the frozen base model.

What is Chain-of-Tools (CoTools)?

CoTools (Chain-of-Tools) is a framework enabling frozen LLMs to interact with external tools dynamically during inference. The model doesn’t need retraining; it learns when to use a tool, which tool to select from potentially vast libraries, and how to call it based on natural language tool descriptions.

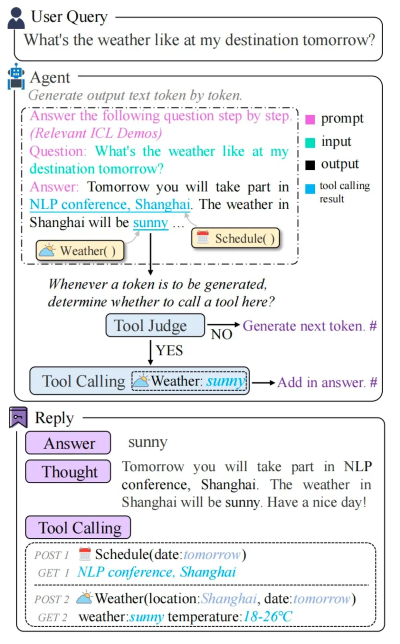

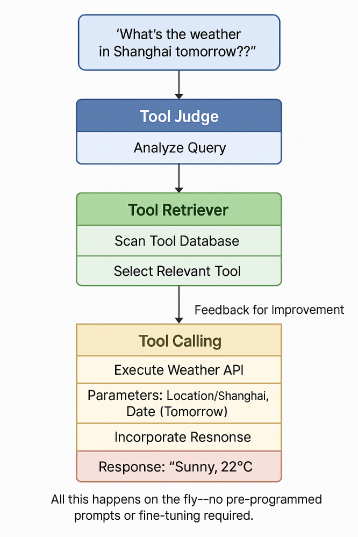

The CoTools pipeline: How LLMs select and use tools

- Tool Judge: Determines if tool usage is necessary at any given point in generating the response.

- Tool Retriever: Selects the most relevant external tool based on the query context and the LLM's current reasoning path (tool selection).

- Tool Calling: Executes the chosen tool with appropriate parameters and integrates the result back into the LLM's generation process.

This entire tool interaction process happens dynamically, outside the core LLM, preserving its general reasoning capabilities.
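The three stages above can be sketched as a small pipeline. This is a toy illustration only: the `Tool` class, the keyword-based judge, and the word-overlap retriever are all assumptions made for readability, and a real system would rely on the model itself rather than hand-written rules.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Tool:
    name: str
    description: str            # natural language description used for retrieval
    run: Callable[[str], str]   # hypothetical call signature: query in, text out


def tool_judge(query: str) -> bool:
    """Stage 1: decide whether a tool is needed (toy keyword rule, an assumption)."""
    triggers = ("weather", "traffic", "price", "calculate")
    return any(word in query.lower() for word in triggers)


def tool_retriever(query: str, tools: list[Tool]) -> Optional[Tool]:
    """Stage 2: pick the tool whose description shares the most words with the query."""
    query_words = set(query.lower().replace("?", "").split())

    def overlap(tool: Tool) -> int:
        return len(query_words & set(tool.description.lower().split()))

    best = max(tools, key=overlap, default=None)
    return best if best is not None and overlap(best) > 0 else None


def answer(query: str, tools: list[Tool]) -> str:
    """Run judge -> retriever -> call, falling back to plain generation."""
    if not tool_judge(query):
        return "[LLM answers directly]"
    tool = tool_retriever(query, tools)
    if tool is None:
        return "[LLM answers directly]"
    return f"[{tool.name}] {tool.run(query)}"  # Stage 3: tool calling


# Stub tools with canned outputs, for illustration only.
TOOLS = [
    Tool("weather_api", "get the weather forecast for a city", lambda q: "Sunny, 22°C"),
    Tool("calculator", "calculate a math expression", lambda q: "42"),
]

print(answer("What's the weather in Shanghai tomorrow?", TOOLS))
print(answer("Tell me a joke", TOOLS))
```

The point of the structure is that each stage can be swapped out independently while the language model itself stays untouched.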

How CoTools works – A simple example

Let’s trace a real-world query demonstrating dynamic tool interaction:

User: "What’s the weather in Shanghai tomorrow?"

- The Tool Judge detects the need for external, real-time information.

- The Tool Retriever identifies a relevant weather API from its available tools based on the request.

- Tool Calling executes the weather API with parameters like `city=Shanghai` and `date=tomorrow`.

- The API output (e.g., “Sunny, 22°C”) is seamlessly integrated into the LLM's final response.

This interaction happens inline during generation, aiming for minimal latency and reducing complex prompt engineering for tool usage.
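To make the inline step concrete, here is a minimal sketch of a tool result being spliced into a response while it is being assembled. The `get_weather` function is a hypothetical stand-in for a weather API with a canned result, and the `<weather>` marker is an assumed placeholder for the point where the Tool Judge fires.

```python
def get_weather(city: str, date: str) -> str:
    """Hypothetical stand-in for a weather API; returns a canned forecast."""
    canned = {("Shanghai", "tomorrow"): "Sunny, 22°C"}
    return canned.get((city, date), "unknown")


def generate_with_tool(draft_tokens: list[str]) -> str:
    """Walk through a drafted answer and fill the tool slot inline."""
    output = []
    for token in draft_tokens:
        if token == "<weather>":  # assumed marker for "a tool is needed here"
            token = get_weather(city="Shanghai", date="tomorrow")
        output.append(token)
    return " ".join(output)


draft = ["Tomorrow", "in", "Shanghai", "it", "will", "be:", "<weather>"]
print(generate_with_tool(draft))  # Tomorrow in Shanghai it will be: Sunny, 22°C
```

Because the call happens in the middle of generation, the user sees a single fluent answer rather than a separate lookup step.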

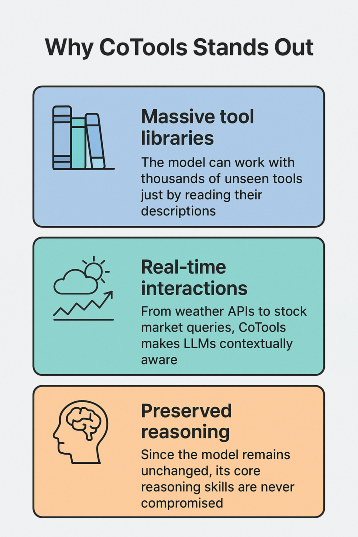

Why Chain-of-Tools (CoTools) stands out for LLM tool use

CoTools excels where traditional LLM tool integration methods often struggle:

- Massive tool libraries: Enables LLMs to work effectively with thousands of unseen tools, interpreting their function solely from natural language descriptions.

- Real-time responsiveness: Adds a layer of real-world awareness by allowing LLMs to access live data from weather, finance, search APIs, and more.

- Preserved LLM intelligence: Because the LLM remains frozen (not fine-tuned for tools), its fundamental reasoning and language generation skills are kept intact.
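The idea of selecting tools purely from their descriptions can be sketched with a simple similarity search. In this illustration a bag-of-words cosine similarity stands in for whatever learned representation a real retriever would use, and the tool names and descriptions are invented for the example.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (an assumption, not a learned representation)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, descriptions: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of the k tools whose descriptions best match the query."""
    q = embed(query)
    ranked = sorted(descriptions, key=lambda name: cosine(q, embed(descriptions[name])), reverse=True)
    return ranked[:k]


library = {
    "weather": "forecast the weather for a city",
    "stocks": "look up the current stock price of a company",
}
# Registering a previously unseen tool needs only a description, no retraining.
library["currency"] = "convert an amount between two currencies"

print(top_k("convert 100 dollars between currencies", library, k=1))  # ['currency']
```

Adding a tool is a one-line change to the library, which is what makes this style of retrieval attractive for very large or frequently changing tool sets.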

CoTools vs. OpenAI tool calling (function calling)

At first glance, CoTools might resemble OpenAI’s function calling (often referred to as OpenAI tool calling) capabilities in models like ChatGPT. However, the differences are significant, particularly for developers building independent, model-agnostic, or open-source AI systems.

| Feature | OpenAI Tool Calling | CoTools (Chain-of-Tools) |
|---|---|---|
| Model Dependency | Requires OpenAI infrastructure | Model-agnostic (works with any frozen LLM) |
| Tool Integration | Structured function schemas | Natural language tool descriptions |
| Scalability (Tool Number) | Limited by predefined schemas | Scales to 1,000s of diverse tools |
| Flexibility | Excellent for defined tools | Ideal for dynamic & unseen tools |
| Open-Source Ready | ❌ Proprietary | ✅ Fully open & modular |

In summary, OpenAI's tool calling is powerful for integrating pre-defined functions within its ecosystem. CoTools, however, offers greater flexibility, scalability, and model independence, making it highly suitable for research, customization, and building enterprise-grade AI systems in open environments.
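The difference in tool integration style can be illustrated by writing the same tool down both ways. The first dictionary follows the general shape of a JSON-Schema function definition as used in schema-based tool calling. The second is a plain description of the kind a description-driven approach would consume. The tool name, fields, and helper function are assumptions for this sketch.

```python
# Schema-based style: parameters are declared up front as a JSON Schema object.
structured_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the weather forecast for a city on a given date.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "date": {"type": "string"},
            },
            "required": ["city", "date"],
        },
    },
}

# Description-based style: the tool is characterized by free text alone.
described_tool = {
    "name": "get_weather",
    "description": "Returns the weather forecast for a city on a given date.",
}


def missing_arguments(schema_tool: dict, arguments: dict) -> list[str]:
    """List required parameters that a proposed call has not supplied."""
    required = schema_tool["function"]["parameters"]["required"]
    return [name for name in required if name not in arguments]


print(missing_arguments(structured_tool, {"city": "Shanghai"}))  # ['date']
```

The schema gives strict validation for a known set of functions, while the description-only form is cheaper to write for each new tool, which is the trade-off the table above summarizes.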

Real-world applications of CoTools & Tool-Augmented LLMs

Here are just a few practical use cases where Chain-of-Tools empowers AI systems:

- Enhanced numerical reasoning: LLMs can offload complex calculations to a dedicated math tool (like a calculator API), ensuring accurate numerical results, even for multi-step problems.

Answer: [CoTools calls calculator tool] → 529.

- Accurate knowledge-based querying: Facilitates LLMs accessing live data via external APIs for up-to-date, factual information retrieval.

Answer: [CoTools calls flight API] → Provides best time and fare data.

- Complex multimodal tasks: Enables a combination of text, image, audio, or video generation/analysis by orchestrating different multimodal AI tools.

Answer: CoTools uses text generation + mapping API + image generation tools to build a complete guide.
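For the numerical reasoning case, the math tool itself can be very small. The sketch below is one possible calculator tool, written for illustration and not taken from the CoTools work: it parses an arithmetic expression with Python's `ast` module and evaluates only a whitelisted set of operators, so arbitrary code cannot run.

```python
import ast
import operator

# Whitelisted operators; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def calculate(expression: str):
    """Evaluate a plain arithmetic expression without using eval()."""

    def ev(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError("unsupported expression")

    return ev(ast.parse(expression, mode="eval").body)


print(calculate("(17 + 4) * 3 - 2 ** 5"))  # 31
```

The language model only has to produce the expression string; the exact arithmetic is delegated to the tool.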

Experimental results: Validating CoTools performance

Chain-of-Tools (CoTools) has been rigorously evaluated across multiple benchmarks to assess its effectiveness in enhancing the reasoning capabilities of frozen Large Language Models (LLMs):

- GSM8K-XL: This benchmark focuses on complex numerical reasoning tasks. CoTools achieved a performance score of 0.19, surpassing the baseline ToolkenGPT's score of 0.18.

- FuncQA: On this benchmark, which tests functional question answering, CoTools attained a score of 0.53 on one-hop questions, compared to ToolkenGPT's 0.48.

- KAMEL: On this knowledge-based question-answering benchmark, CoTools reached an accuracy of 93.8% using supervised data, slightly outperforming ToolkenGPT's 93.4%.

To further evaluate CoTools' adaptability with previously unseen tools, the SimpleToolQuestions (STQuestions) dataset was introduced, encompassing over 1,800 tools. In this challenging scenario, CoTools achieved a top-1 accuracy of 10.4% and a top-5 accuracy of 33.7% on unseen tools, significantly outperforming ToolkenGPT, which recorded 0% in top-1 accuracy.
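For readers unfamiliar with the metric, top-k accuracy counts a query as correct when the right tool appears anywhere in the first k retrieved candidates. The sketch below computes it on a tiny made-up example; the data is invented for illustration and has no relation to the reported results.

```python
def top_k_accuracy(ranked_predictions: list[list[str]], gold: list[str], k: int) -> float:
    """Fraction of queries whose correct tool is among the first k ranked candidates."""
    hits = sum(1 for ranked, answer in zip(ranked_predictions, gold) if answer in ranked[:k])
    return hits / len(gold)


# Made-up rankings for four queries over three tools named "a", "b", "c".
predictions = [
    ["a", "b", "c"],
    ["b", "a", "c"],
    ["c", "b", "a"],
    ["a", "c", "b"],
]
gold = ["a", "a", "a", "b"]

print(top_k_accuracy(predictions, gold, k=1))  # 0.25
print(top_k_accuracy(predictions, gold, k=2))  # 0.5
print(top_k_accuracy(predictions, gold, k=3))  # 1.0
```

Top-5 accuracy is always at least as high as top-1, which is why the two figures are usually reported together.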

What’s Next for Tool-Augmented AI and LLM Tool Use?

Chain-of-Tools (CoTools) represents a major advancement towards creating more intelligent, context-aware AI agents that can:

- Automate complex AI workflows.

- Pull and reason over live data from diverse sources.

- Adapt to new environments and external tools dynamically.

- Collaborate effectively within diverse AI toolchains.

Its open, model-agnostic, and modular architecture makes CoTools a compelling framework for AI researchers, startups, and enterprises looking to build the next generation of tool-augmented AI.

Our Thoughts on Chain-of-Tools

Large language models have made incredible progress, but their true potential is unlocked when they can collaborate—not just with humans but with a vast ecosystem of external tools.

CoTools bridges this gap, enabling LLMs that don’t just think but also act by drawing on real-world data and functionality. It offers a scalable, efficient, and, crucially, model-agnostic approach to LLM tool integration.

Whether you’re building the next-gen AI assistant, enhancing enterprise AI workflows, or pushing the boundaries of AI research, Chain-of-Tools provides a powerful glimpse into the future of autonomous, tool-augmented AI. Go check out the research and explore its potential!

References

- Primary research paper: Chain-of-Tools: Utilizing Massive Unseen Tools in the CoT Reasoning of Frozen Language Models

- Related blog post: Chain-of-Tools: Unleashing Frozen LLMs on a Universe of Unseen Tools