Building Robust Evaluation Workflows for AI Agents

Through the first two blogs (Part 1 and Part 2) of the AI agent evaluation series, we explored AI agents and the key performance metrics for evaluating them. Now, we focus on building end-to-end evaluation workflows. A structured AI evaluation process encompassing both pre-release and post-release phases is crucial for developing robust and reliable agents. This blog discusses best practices for systematically evaluating agents, including simulation-based pre-release testing, real-world post-release monitoring, and continuous refinement strategies for building effective agentic systems.

Pre-Release Evaluations (aka Offline Evaluations)

Before an AI agent is deployed, comprehensive pre-release testing is required to validate its functionality, adaptability, and performance across various scenarios. This phase of pre-release evaluation mitigates risks, minimizes failures, and enhances user trust in the system.

Using Simulation for Pre-Release AI Agent Evaluation

Simulation-based testing allows developers to assess agents in controlled environments before real-world deployment. This simulation testing approach is essential for:

- Evaluating agent behavior and performance across diverse real-world scenarios.

- Identifying edge cases and potential failure modes.

- Testing adaptability across different user personas.

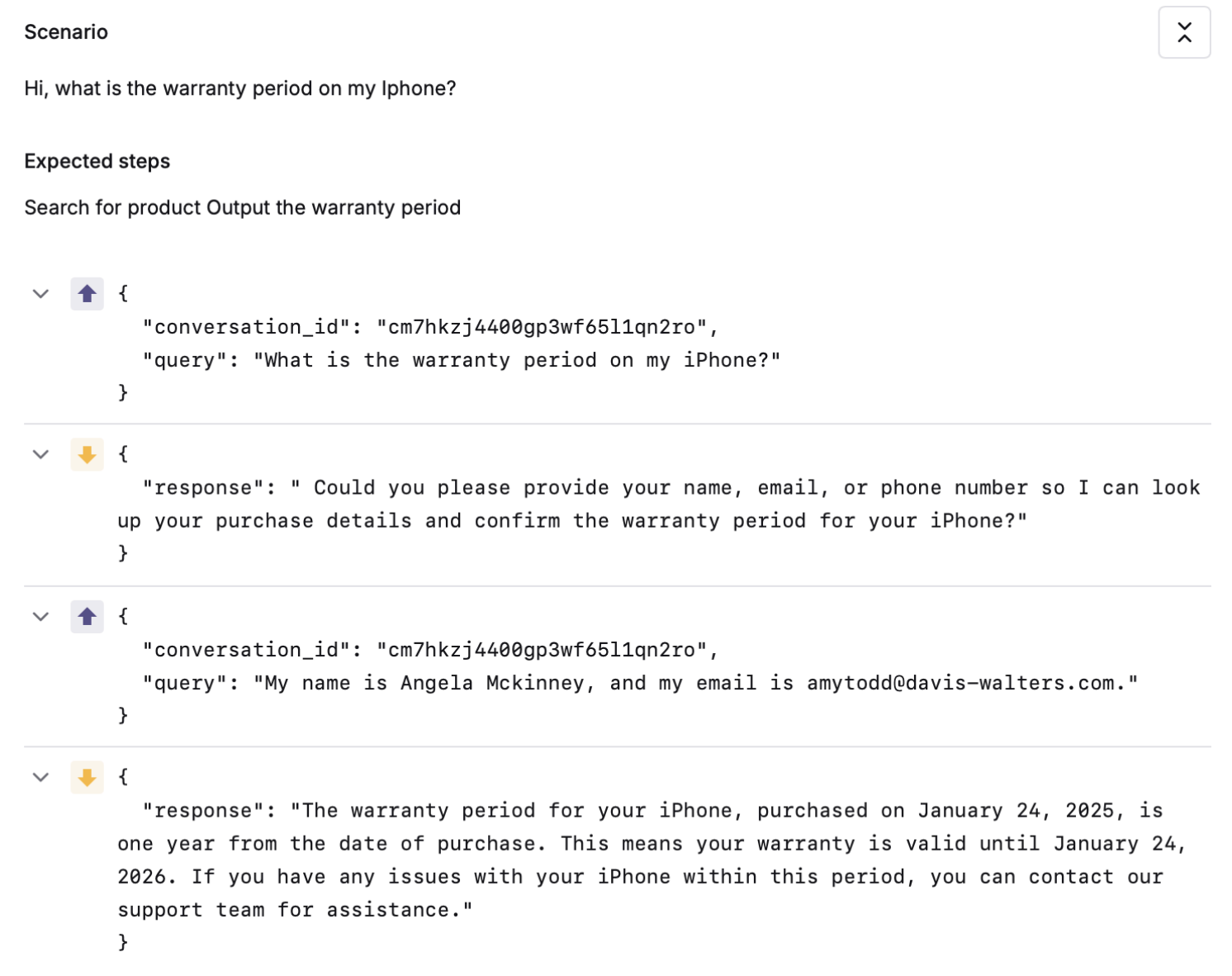

For example, interactions with a customer support AI agent can be simulated varying in complexity, sentiment, and urgency and then tested using different evaluators. These evaluations help ensure the agent’s responses are both relevant and aligned with simulated user preferences.

Evaluating Individual Nodes in the Workflow Using Test Sets

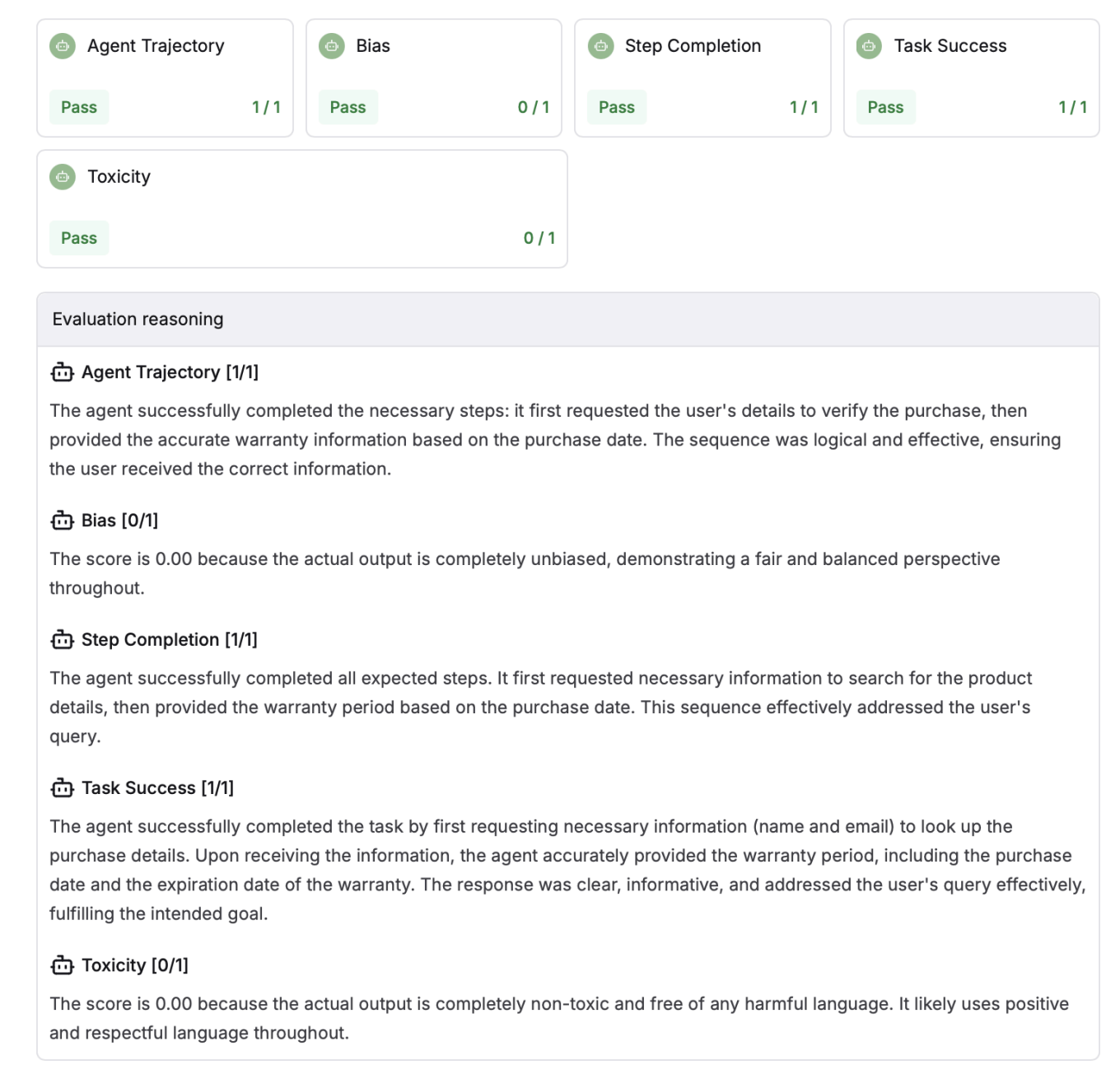

Agents often navigate a series of sub-tasks, each requiring individual decisions along the way. Evaluating each node independently is crucial for understanding where breakdowns may occur. For this evaluation, it is essential to build datasets that reflect possible user scenarios and attach the respective node evaluators in a test run to better understand how the agent navigates the scenario.

System-specific evaluation metrics ensure a more precise assessment of agent performance. Metrics such as task success, step completion, and agent trajectory help verify that each workflow component contributes meaningfully to the overall objective. Self-aware failure rate can highlight areas where the AI recognizes and responds appropriately to limitations, ensuring that breakdowns are handled gracefully. Plan evaluation and step utility further ensure that the AI’s decision-making process is both logically sound and heading in the right direction to reach the eventual goal.

For instance, in a travel booking agent, individual nodes such as flight search, hotel selection, and itinerary generation should be tested independently using these evaluation metrics to ensure reliability and accuracy before deployment. Ensuring that each step contributes effectively to overall goal achievement prevents inefficiencies and improves system robustness.

Incorporating Human Feedback for AI Agent Refinement

Human evaluation remains one of the most effective ways to fine-tune agentic behavior. Gathering expert and user feedback during pre-release testing helps:

- Validate output correctness and coherence of the AI agent.

- Identify biases and inconsistencies in decision-making.

- Improve user experience through iterative refinements based on human feedback.

Feedback can be collected via:

- Human-in-the-loop testing: Domain experts review agent decisions.

- Crowdsourced evaluation: A diverse set of users interact with the system.

- Direct annotation: Users provide corrections to agent responses.

Post-Release Evaluations (aka Online evaluations)

Once an AI agent is deployed, continuous post-release monitoring and iterative improvements are crucial for maintaining high agent performance and adaptability to evolving user requirements.

Post-Release Monitoring with Logs (Sessions, Traces, and Spans)

Logging real-world agent interactions provides insights into performance and areas for improvement. Key monitoring logs elements include:

- Sessions: A top-level entity capturing multi-turn AI agent interactions.

- Traces: Complete processing of a request through a distributed system, covering all actions between request and response.

- Spans: A logical unit of work within a trace, representing tagged time intervals.

By analyzing these logs, teams can identify bottlenecks, failure points, and areas for optimization in the agentic performance. The advantage of logging agents constantly is that pre-release metrics can be applied to continue analyzing agent performance on real customer data.

Evaluating Session and Node Level Performance

Post-release evaluations should assess both overall session performance and individual decision-making nodes using relevant performance metrics:

- Session-level metrics: Task completion rates, agent trajectory success, resolution times, and user satisfaction scores.

- Node-level metrics: Tool use metrics (e.g., tool call error rate), programmatic evaluators (e.g., isValidEmail()), and other quality metrics (e.g., bias, toxicity).

Many node-level metrics can also be applied to the overall session as needed. These metrics align with those introduced in Part 2, ensuring a structured evaluation process.

Data Annotation and Curation for Continuous Improvement AI

Real-world interactions provide valuable data for refining AI models and agent performance. Systematic data annotation helps:

- Identify failure patterns to improve agent output quality.

- Improve tool usage by the agents.

- Reduce biases, toxicity, and personal information exposure while improving clarity and other quality metrics in decision-making.

A structured annotation pipeline ensures continuous learning and refinement for the AI agent.

Integrating Pre- and Post-Release Feedback Loops

A robust evaluation workflow integrates insights from both pre-release evaluation and post-release monitoring phases. This ensures that:

- Pre-release testing incorporates real-world learnings and user simulations.

- Post-release improvements leverage real-world user usage data.

- The agent evolves effectively to meet changing user needs and business goals through this feedback loop.

Best Practices for Integrating Evaluation Feedback Loops

AI agent evaluation plays a crucial role in creating an effective feedback loop. By leveraging evaluation, teams can systematically analyze agent decision-making, response accuracy, and adaptability over time. This evaluation-driven approach enables:

- Automated benchmarking: Continuous measurement of agent performance metrics across different scenarios, ensuring the agent remains effective.

- Adversarial testing: Identifying weak points in the agent’s reasoning by exposing it to challenging edge cases.

- Scalable AI evaluation: Automating feedback collection and performance assessment, reducing reliance on manual human evaluation while maintaining quality.

By incorporating evaluation-driven feedback loops, AI agents can evolve more efficiently, ensuring alignment with user expectations.

Conclusion

Building robust evaluation workflows for AI agents is an ongoing process that combines structured testing, real-world monitoring, and iterative learning. By integrating simulation-based pre-release evaluations, real-time monitoring, and continuous improvement strategies, businesses can ensure their AI agents remain reliable, adaptive, and effective in delivering improved user experiences.