A Survey of Agent Evaluation Frameworks: Benchmarking the Benchmarks

In recent months, we've witnessed an explosion in the development of AI agents: autonomous systems powered by large language models (LLMs) that perform complex tasks through reasoning, planning, and tool use. As the field advances, a critical question emerges: how do we effectively measure and compare these agents' capabilities? The paper "Survey on Evaluation of LLM-based Agents" [1] examines this evolving landscape by surveying the benchmarks and frameworks used to evaluate agents.

In this blog post, we'll dive deep into this paper's findings, compare the major evaluation frameworks, and share some insights on where agent evaluation should head next.

The State of Agent Evaluation

The fundamental challenge is that the adoption of agents has outpaced our ability to evaluate them systematically. The result is a fragmented set of evaluation methods, which makes it difficult to compare agents and track progress in the field.

The paper categorizes existing benchmarks along several dimensions:

By Core Capability

This dimension assesses core functionalities, including:

- Planning and multi-step reasoning: Evaluating an agent's capacity to solve complex tasks by planning sequential steps [2][3][4][5][6][7][8].

- Function calling and tool use: Agents' abilities to utilize external tools and APIs [9][10][11].

- Self-reflection: Agents' capacity to critique and revise their own actions [12][13].

- Memory: Evaluating how effectively agents retain and apply previous information in new contexts [12][14].

By Evaluation Method

- Behavioral testing: Direct observation of agent actions in controlled environments

- Output evaluation: Assessment of final results

- Process evaluation: Analysis of the steps taken to reach a solution
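
To make the difference between output evaluation and process evaluation concrete, here is a minimal Python sketch. The `Step` and `Trajectory` types, their fields, and the example run are hypothetical illustrations, not a schema from the paper or from any particular framework.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    """One recorded agent action (hypothetical schema)."""
    tool: str
    succeeded: bool


@dataclass
class Trajectory:
    """A full agent run: the steps taken plus the final answer."""
    steps: list = field(default_factory=list)
    final_answer: str = ""


def output_score(traj: Trajectory, expected: str) -> float:
    """Output evaluation: looks only at the final result."""
    same = traj.final_answer.strip().lower() == expected.strip().lower()
    return 1.0 if same else 0.0


def process_score(traj: Trajectory, allowed_tools: set) -> float:
    """Process evaluation: fraction of steps that used an allowed tool and succeeded."""
    if not traj.steps:
        return 0.0
    good = sum(1 for s in traj.steps if s.tool in allowed_tools and s.succeeded)
    return good / len(traj.steps)


# Example run: the answer is correct, but one of the three steps failed.
run = Trajectory(
    steps=[Step("search", True), Step("calculator", False), Step("calculator", True)],
    final_answer="42",
)
print(output_score(run, "42"))
print(process_score(run, {"search", "calculator"}))
```

In this example the run gets full marks on output while the process score is lower, which is the kind of gap an outcome-only metric would hide.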

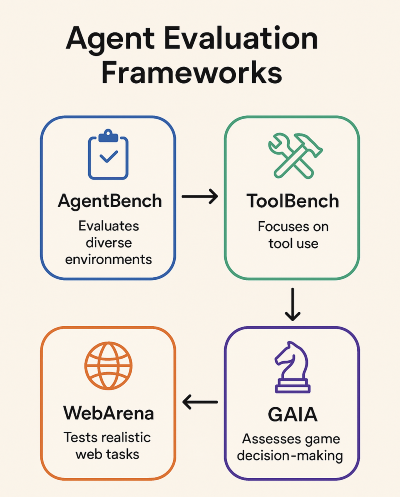

Agent Evaluation Frameworks

Let's examine some of the evaluation frameworks discussed in the paper:

AgentBench

AgentBench [15] emerged as one of the earliest comprehensive frameworks, evaluating agents across eight diverse environments, including web shopping, database operations, and coding.

Strengths:

- Covers a wide range of real-world tasks

- Established baseline for comparing commercial and open-source agents

Limitations:

- Primarily focuses on task completion rather than trajectory quality

- Limited reflection on agent reasoning capabilities

ToolBench

ToolBench [16] focuses specifically on tool use, providing a standardized API format for testing an agent's ability to select and use appropriate tools.

Strengths:

- Specialized evaluation of an increasingly important capability

- Standardized API approach improves reproducibility

Limitations:

- Narrower focus than some other frameworks

- May not reflect real-world tool interaction challenges

WebArena

WebArena [17] tests agents in realistic, self-hosted web environments, requiring them to complete tasks on sites for e-commerce, social forums, collaborative software development, and content management.

Strengths:

- High-quality, realistic environments for agent testing

- Directly relevant to commercial applications

Limitations:

- Complex setup requirements

- Highly sensitive to web interface changes

GAIA

GAIA [18] is a benchmark for general AI assistants. It poses real-world questions whose answers require multi-step reasoning, web browsing, tool use, and handling of multimodal inputs.

Strengths:

- Tests performance on a wide variety of tasks that take many steps to solve

- Evaluates strategic thinking and adaptation

Limitations:

- Scores only the final answer, so the quality of intermediate steps is not measured

- High computational requirements

Generalist Agent Benchmarks

Generalist benchmarks assess the versatility of agents across varied tasks. A notable example highlighted in the paper is the Databricks Domain Intelligence Benchmark Suite (DIBS) [19], which evaluates agents across specialized industry domains and enterprise scenarios. Such benchmarks measure adaptability and cross-domain effectiveness.

Framework Comparisons: Trade-offs

The paper reveals several interesting patterns when comparing these frameworks:

- Task complexity vs. reproducibility trade-off: More complex evaluation environments tend to offer better real-world relevance but suffer from reproducibility issues.

- Metric inconsistency: Different frameworks emphasize different metrics, making cross-framework comparisons challenging.

- LLM-as-judge variations: Many frameworks rely on LLMs to evaluate performance, but implementation details vary significantly, affecting consistency.

- Open vs. closed environments: Open-world evaluations provide richer insights but introduce more variables that are difficult to control.

The authors identify a concerning trend: many benchmarks are designed primarily to showcase strengths rather than to provide a comprehensive evaluation. This encourages overfitting to specific benchmarks instead of driving generalizable improvements, and it raises the question of how to improve the state of agent evaluation.

What Should We Look at Next?

Based on the paper's findings, these are the areas we believe deserve the most attention:

Process-Oriented Evaluation is Crucial

Most current frameworks focus heavily on outcomes, but the process by which agents reach those outcomes is equally important. Future evaluation frameworks should place greater emphasis on:

- Quality of reasoning chains

- Efficiency of tool selection

- Adaptability when initial approaches fail
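
Two of the signals above, tool-selection efficiency and adaptability after a failed step, can be approximated with simple trajectory statistics. The sketch below is one possible formulation under our own assumptions: `tool_efficiency` and `recovery_rate` are hypothetical helper names, and the definitions (path-length ratio, immediate recovery) are illustrative choices rather than metrics defined in the paper.

```python
def tool_efficiency(calls: list, reference: list) -> float:
    """Ratio of reference-path length to the number of calls made, capped at 1.0.

    `reference` is an assumed hand-written minimal tool sequence for the task.
    """
    if not calls:
        return 0.0
    return min(1.0, len(reference) / len(calls))


def recovery_rate(outcomes: list) -> float:
    """Fraction of failed steps that are immediately followed by a successful step."""
    failures = [i for i, ok in enumerate(outcomes) if not ok]
    if not failures:
        return 1.0  # nothing to recover from
    recovered = sum(
        1 for i in failures if i + 1 < len(outcomes) and outcomes[i + 1]
    )
    return recovered / len(failures)


# Example: the agent used four calls where two would have sufficed,
# and recovered from one of its two failures.
calls = ["search", "search", "calculator", "calculator"]
reference = ["search", "calculator"]
outcomes = [True, False, True, False]

print(tool_efficiency(calls, reference))
print(recovery_rate(outcomes))
```

Quality of reasoning chains is harder to reduce to a formula and usually needs human or model-based grading, so it is left out of this sketch.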

Evaluating Agent Self-Improvement

A key aspect missing from many frameworks is measuring an agent's ability to learn from mistakes and improve over time. Future frameworks should incorporate:

- Learning curves across repeated tasks

- Adaptation to feedback

- Knowledge retention and transfer between tasks
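
A learning curve across repeated tasks can be as simple as the success rate at each attempt index. The following sketch assumes a hypothetical results layout, `results[task][attempt]`, and uses the difference between the last and first attempt as a crude improvement measure; both are illustrative assumptions.

```python
def learning_curve(results: list) -> list:
    """Success rate per attempt index, given results[task][attempt] as booleans."""
    if not results:
        return []
    # Only compare attempt indices that every task actually reached.
    n_attempts = min(len(task) for task in results)
    return [
        sum(task[a] for task in results) / len(results)
        for a in range(n_attempts)
    ]


def improvement(curve: list) -> float:
    """Change in success rate from the first attempt to the last."""
    if not curve:
        return 0.0
    return curve[-1] - curve[0]


# Example: four tasks, three attempts each.
results = [
    [False, True, True],
    [False, False, True],
    [True, True, True],
    [False, False, False],
]
curve = learning_curve(results)
print(curve)
print(improvement(curve))
```

A flat curve suggests the agent is not using feedback from earlier attempts, whatever its first-attempt score is.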

Multi-Dimensional Scoring

Binary success/failure metrics are insufficient for complex agent systems. We need evaluation frameworks that score agents along multiple dimensions:

- Task completion rate

- Time/resource efficiency

- Safety compliance

- User satisfaction

To address these aspects, we are building out our agent eval suite on the Maxim platform with these functionalities.
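
As an illustration of what multi-dimensional scoring could look like in code, the sketch below keeps the four dimensions listed above as separate fields and only combines them on request. The `AgentScore` class, the 0-to-1 scale, and the example weights are assumptions made for this example and do not describe any existing product's API.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class AgentScore:
    """Per-dimension scores, each assumed to be normalized to the range 0..1."""
    task_completion: float
    efficiency: float
    safety: float
    user_satisfaction: float

    def weighted(self, weights: dict) -> float:
        """Weighted average over the dimensions named in `weights`."""
        total = sum(weights.values())
        if total <= 0:
            raise ValueError("weights must sum to a positive number")
        return sum(getattr(self, name) * w for name, w in weights.items()) / total


score = AgentScore(task_completion=0.8, efficiency=0.5, safety=1.0, user_satisfaction=0.6)
weights = {"task_completion": 0.4, "efficiency": 0.2, "safety": 0.3, "user_satisfaction": 0.1}

print(asdict(score))            # report every dimension, not just the aggregate
print(score.weighted(weights))  # a single number only when one is needed
```

Keeping the dimensions separate means a high aggregate cannot mask a low safety score, and the weights stay an explicit, reviewable choice.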

Standardized Environments with Variable Difficulty

To enable meaningful comparisons, we need standardized environments that can be calibrated to different difficulty levels. This would help track progress more systematically and identify capability thresholds.
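
One way to read results from a difficulty-calibrated environment is to compute the pass rate per level and report the highest level an agent clears. This is a minimal sketch: the integer difficulty levels, the `(level, passed)` result format, and the 0.5 cutoff are all assumptions chosen for illustration.

```python
from collections import defaultdict
from typing import Optional


def pass_rate_by_level(results: list) -> dict:
    """Pass rate per difficulty level from (level, passed) pairs."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for level, passed in results:
        totals[level] += 1
        passes[level] += int(passed)
    return {level: passes[level] / totals[level] for level in sorted(totals)}


def capability_threshold(rates: dict, cutoff: float = 0.5) -> Optional[int]:
    """Highest level reached before the pass rate first drops below the cutoff."""
    reached = None
    for level in sorted(rates):
        if rates[level] < cutoff:
            break
        reached = level
    return reached


# Example: two tasks at each of three difficulty levels.
results = [(1, True), (1, True), (2, True), (2, False), (3, False), (3, False)]
rates = pass_rate_by_level(results)
print(rates)
print(capability_threshold(rates))
```

Tracking this threshold over successive agent versions gives a coarser but more comparable progress signal than a single aggregate score.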

Human-AI Collaborative Evaluation

The paper touches on this briefly, but we believe evaluation frameworks need to place greater emphasis on how well agents collaborate with humans. This includes:

- Following instructions precisely

- Asking clarifying questions when appropriate

- Providing transparent reasoning

- Adapting to user feedback

Conclusion

The paper provides a valuable service by categorizing and analyzing the rapidly evolving landscape of agent evaluation. As the field continues to mature, we need to move beyond fragmented evaluation approaches toward more standardized, comprehensive frameworks.

The ideal evaluation framework would combine rigorous behavioral testing with process evaluation, provide standardized environments with variable difficulty settings, and measure both task performance and human alignment. Only then can we meaningfully track progress in agent development and ensure that advances are substantial rather than superficial.

We must recognize that how we evaluate agents will ultimately shape how they're developed. By creating more holistic evaluation frameworks, we can help steer agent development toward systems that are not just powerful but also reliable, transparent, and aligned with human needs.

References

[1] Yehudai, A., et al. (2025). Survey on Evaluation of LLM-based Agents. arXiv preprint arXiv:2503.16416.

[2] Ling, W., et al. (2017). Program Induction by Rationale Generation: Learning to Solve and Explain Algebraic Word Problems (AQUA-RAT).

[3] Yang, Z., et al. (2018). HotpotQA: A Dataset for Diverse, Explainable Multi-hop Question Answering.

[4] Clark, P., et al. (2018). Think you have Solved Question Answering? Try ARC, the AI2 Reasoning Challenge.

[5] Cobbe, K., et al. (2021). GSM8K: Grade School Math Word Problems.

[6] Hendrycks, D., et al. (2021). Measuring Mathematical Problem Solving with the MATH Dataset.

[7] Srivastava, S., et al. (2023). PlanBench: Benchmarking Planning Capabilities.

[8] Patel, S., et al. (2023). FlowBench: Evaluating Understanding of Sequential Processes.

[9] Liu, H., et al. (2022). ToolEmu: A Dataset for Evaluating Tool Usage.

[10] Parisi, G. et al. (2022). MINT: Mathematical Integration with External Tools.

[11] AutoPlanBench Team. (2023). AutoPlanBench: Evaluating Automated Planning Capabilities.

[12] MUSR Team. (2023). MUSR: Multi-Stage Reasoning and Reflection.

[13] Suzgun, M., et al. (2022). Big Bench Hard (BBH): Benchmark for Complex Reasoning Tasks.

[14] Khashabi, D., et al. (2018). MultiRC: A Dataset for Multi-Document Reading Comprehension.

[15] Liu, X., et al. (2023). AgentBench: Evaluating LLMs as Agents. arXiv preprint arXiv:2308.03688.

[16] Qin, Y., et al. (2023). ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs. arXiv preprint arXiv:2307.16789.

[17] Zhou, S., Xu, F. F., Zhu, H., Zhou, X., Lo, R., Sridhar, A., Cheng, X., Ou, T., Bisk, Y., Fried, D., and Alon, U. (2023). WebArena: A Realistic Web Environment for Building Autonomous Agents. arXiv preprint arXiv:2307.13854.

[18] Mialon, G., Fourrier, C., Wolf, T., LeCun, Y., and Scialom, T. (2023). GAIA: A Benchmark for General AI Assistants. In The Twelfth International Conference on Learning Representations.

[19] Databricks Blog. (2024). Domain Intelligence Benchmark Suite (DIBS): Benchmarking for Generalist Agents.