Mindtickle’s Robust AI Productionizing Process powered by Maxim

About Mindtickle

Mindtickle is the market-leading revenue enablement platform that combines on-the-job learning and deal execution to drive behavior change and get more revenue per rep. Mindtickle is recognized as a market leader by top industry analysts and is ranked by G2 as the #1 sales onboarding and training product. This year, Mindtickle won a Bronze Stevie Award for Technology Excellence.

Mindtickle Spearheading AI-Powered Sales Efficiency

AI is transforming how revenue teams work, and Mindtickle is leading the charge with AI-powered advancements that help sellers, revenue managers, and enablement teams save time, engage buyers, and improve team performance.

A few of the most-loved AI capabilities built by the Mindtickle team are:

- Interactive AI role-plays: AI-generated customers simulate live interactions, enabling sellers to practice pitches, handle objections, and tackle tough conversations in a safe, scalable environment—saving managers time.

- AI content creation: Mindtickle streamlines training content creation for enablement managers, empowering them to build diverse learning programs—from active learning to assessments—without heavy manual effort.

- Conversation intelligence: Automated call recording, transcription, and AI analysis allow sellers to focus on conversations while receiving actionable insights like summaries, coaching tips, and scoring. This improves follow-ups, deal closures, and coaching efficiency.

Additional features like semantic search, CMS, outreach tools, and the manager command center further enhance productivity.

Challenge

The Mindtickle team has been laser-focused on creating the best experience for revenue teams. To support the many AI features it launches, the team built rigorous manual processes to test and evaluate its systems. As the team built more complex features, testing these systems and ensuring AI quality became hard to manage and scale. Collaboration across the teams working on these features also needed to improve. The manual process involved:

- Curating datasets manually in spreadsheets, which made managing them, continuously updating them, and collaborating on them very hard

- A human review process that involved multiple stakeholders and led to long feedback cycles

- No single view for stakeholders into the experiments different teams were running and what those experiments found

These processes were critical to Mindtickle's successful AI launches, but the engineering teams were looking for ways to make them more efficient, scalable, and collaborative.

Mindtickle and Maxim Driving AI Quality

Mindtickle’s central ML platform team partnered with Maxim to develop, test, and implement all the GenAI features the company is rapidly shipping. The most common workflow involves:

- Identifying a feature that needs to be launched or improved

- Curating multi-modal datasets focused on the specific feature

- Choosing evaluation criteria suited to the use case, either directly from the evaluation store or as custom metrics

- Attaching these evaluators and datasets to prompts, prompt chains, or more complex AI workflows for evaluations. This could involve human evaluations as well.

- Generating quality reports with detailed comparisons between different prompts or workflows

- Analyzing the outcomes to make decisions about the product or feature. This also involves sharing reports/findings seamlessly with the required stakeholders

- Repeating the cycle
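The workflow above can be sketched, independently of any particular platform, as a loop that runs several prompt variants over a curated dataset, scores each output with a set of evaluators, and produces a comparison report. This is a minimal illustration, not Maxim's SDK: the `Example` rows, the `exact_match` and `length_within` evaluators, and the stub variants (which stand in for real LLM calls) are all hypothetical, and human evaluation is left out.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable


# Hypothetical dataset row: an input plus a reference answer.
@dataclass
class Example:
    input: str
    expected: str


# An evaluator maps (model output, example) to a score in [0, 1].
Evaluator = Callable[[str, Example], float]
# A "variant" stands in for a prompt, prompt chain, or larger workflow.
Variant = Callable[[str], str]


def exact_match(output: str, ex: Example) -> float:
    """Case-insensitive comparison against the reference answer."""
    return 1.0 if output.strip().lower() == ex.expected.strip().lower() else 0.0


def length_within(limit: int) -> Evaluator:
    """Custom metric: pass if the output has at most `limit` words."""
    def score(output: str, ex: Example) -> float:
        return 1.0 if len(output.split()) <= limit else 0.0
    return score


def evaluate(variants: dict[str, Variant],
             dataset: list[Example],
             evaluators: dict[str, Evaluator]) -> dict[str, dict[str, float]]:
    """Run every variant on every example; return the mean score per evaluator."""
    report: dict[str, dict[str, float]] = {}
    for name, run in variants.items():
        outputs = [run(ex.input) for ex in dataset]
        report[name] = {
            metric: mean(fn(out, ex) for out, ex in zip(outputs, dataset))
            for metric, fn in evaluators.items()
        }
    return report


def print_report(report: dict[str, dict[str, float]]) -> None:
    """Print a simple side-by-side comparison table."""
    metrics = sorted(next(iter(report.values())))
    print("variant".ljust(12) + "".join(m.ljust(16) for m in metrics))
    for name, scores in report.items():
        print(name.ljust(12) + "".join(f"{scores[m]:<16.2f}" for m in metrics))


if __name__ == "__main__":
    dataset = [Example("capital of France?", "Paris"),
               Example("2 + 2?", "4")]
    # Stub variants; real ones would call an LLM with different prompts.
    answers = {"capital of France?": "Paris", "2 + 2?": "4"}
    variants = {
        "prompt_v1": lambda q: answers[q],
        "prompt_v2": lambda q: f"The answer is {answers[q]}.",
    }
    evaluators = {"exact_match": exact_match, "concise": length_within(3)}
    print_report(evaluate(variants, dataset, evaluators))
```

Keeping evaluators as plain functions makes it easy to mix off-the-shelf metrics with use-case-specific ones, and the report is just a table of means that can be shared with stakeholders and compared across iterations.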

One of the highlights for the Mindtickle team came when GPT-3.5 was being deprecated and the team had to replace it across all product features with suitable models on a very short timeline. Using Maxim’s evaluation platform, the team transitioned away from GPT-3.5 with a metric-driven, scalable approach: evaluating existing prompts and datasets, iterating to identify the best replacement models, and tailoring each use case for optimal performance.
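One way to make a model swap like this metric-driven is to score each candidate model per use case and accept only candidates that do not regress below the outgoing model's score by more than a small tolerance. The sketch below assumes evaluation scores have already been computed; the use-case names, the `model_a`/`model_b` candidates, the tolerance, and all numbers are hypothetical placeholders, not Mindtickle's results.

```python
from typing import Optional


def pick_replacements(
    scores: dict[str, dict[str, float]],
    baseline: dict[str, float],
    tolerance: float = 0.02,
) -> dict[str, Optional[str]]:
    """For each use case, choose the highest-scoring candidate model whose
    score is at most `tolerance` below the baseline (outgoing) model's score.
    Returns None for a use case where every candidate regresses, signalling
    that the prompt needs another iteration before switching."""
    choice: dict[str, Optional[str]] = {}
    for use_case, by_model in scores.items():
        floor = baseline[use_case] - tolerance
        acceptable = {m: s for m, s in by_model.items() if s >= floor}
        choice[use_case] = max(acceptable, key=acceptable.get) if acceptable else None
    return choice


if __name__ == "__main__":
    # Placeholder eval scores in [0, 1] for hypothetical use cases.
    baseline = {"summaries": 0.81, "coaching_tips": 0.77, "scoring": 0.90}
    scores = {
        "summaries":     {"model_a": 0.84, "model_b": 0.80},
        "coaching_tips": {"model_a": 0.70, "model_b": 0.79},
        "scoring":       {"model_a": 0.85, "model_b": 0.86},
    }
    for use_case, model in pick_replacements(scores, baseline).items():
        print(f"{use_case}: {model or 'no candidate yet, iterate on prompt'}")
```

With these placeholder numbers, `summaries` would move to `model_a`, `coaching_tips` to `model_b`, and `scoring` would be flagged for further prompt iteration, which mirrors the per-use-case tailoring described above.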

"Maxim’s ability to help iterate with complex AI workflows and compare results has been incredibly useful. It has allowed us to automate testing and refine our reporting, helping us improve AI quality and save time."

- Ajay Dubey, Engineering Lead, Mindtickle

Maxim’s data curation and evaluation framework enabled a data-driven approach to improve and refine system performance. The workflow incorporated rigorous pre-release testing, detailed reporting, and iterative adjustments to prompts and configurations, ensuring stability and avoiding any performance and quality regressions.

Beyond engineering and software teams, other users, including QA teams, have started incorporating these capabilities into their workflows. For instance, QA teams now use Maxim’s solutions as part of their regular testing cycles, while staff engineers leverage them to generate automated reports, compare datasets, and refine prompts. These practices have yielded measurable benefits and streamlined processes across the board.

Maxim has also been instrumental in audit processes, such as vulnerability assessments and penetration testing. In one instance, Maxim helped identify and address specific security breaches, ensuring compliance and strengthening the organization’s security posture. This use case underscores the platform’s versatility and its ability to address critical enterprise challenges.

"During a vulnerability assessment, Maxim helped us identify potential issues we might have missed. Using Maxim, we were able to pinpoint critical changes needed to address security breaches and ensure our systems met audit requirements. It was instrumental in ensuring our product's robustness."

- Ajay Dubey, Engineering Lead, Mindtickle

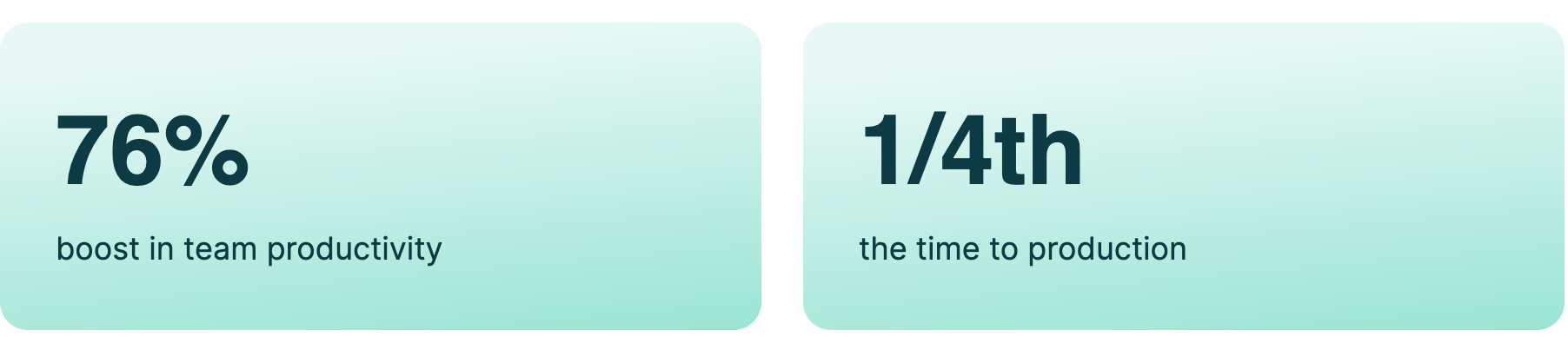

All of this led to a 76% productivity improvement for the Mindtickle team, reducing the average time to production from 21 days to 5 days. From an engineering process perspective, it also improved collaboration across teams and produced a playbook that teams could learn from internally and externally.
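The 76% figure appears to correspond to the relative reduction in average time to production, assuming productivity is measured that way:

$$
\frac{21 - 5}{21} = \frac{16}{21} \approx 0.762 \approx 76\%
$$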

Conclusion

Mindtickle’s approach to AI development relies on building the right foundation early so that the team can continue to ship high-quality AI capabilities to revenue teams globally. Its framework for AI development and engineering process maturity offers valuable insight into how companies should shift their focus as they mature in adopting GenAI. The Mindtickle team emphasized that the path to maturity is not linear but layered, with each step building on the foundation of the previous one. They also underscored the pivotal role of evaluation early in the AI adoption lifecycle and believe that Maxim’s approach is well suited to high-stakes applications where quality assurance is paramount.

"Integrating robust evaluation mechanisms is foundational to our AI strategy. It enables us to shift from reactive troubleshooting to proactive quality management, which is critical as AI workloads and user interactions grow more complex."

- Ajay Dubey, Engineering Lead, Mindtickle

For Maxim AI, this philosophy resonates deeply as they continue to enable global teams building complex multi-agentic architectures to improve and ship their applications reliably. We’d love to chat more if you’re looking to build robust evaluation processes for your AI development.

Learn more about Maxim here: https://www.getmaxim.ai/

Learn more about Mindtickle here: https://www.mindtickle.com/