LLM hallucination detection

Introduction

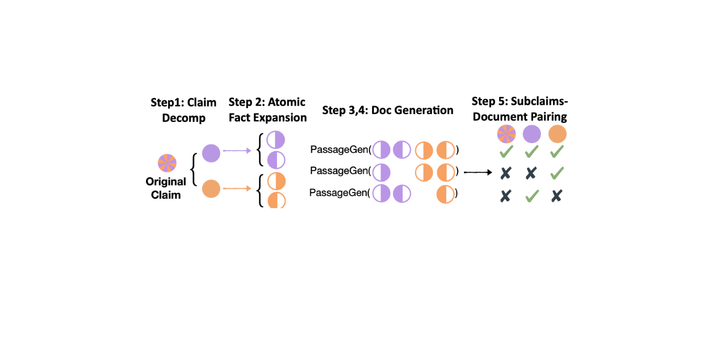

Large Language Models (LLMs) such as GPT-4 and Llama 2 generate human-like text, which has enabled a variety of applications. Alongside this fluency, however, a major challenge remains: hallucinations, i.e., cases where the model generates factually incorrect or unverifiable information. Hallucinations in LLMs are not one-dimensional but manifest in various forms,