
Evaluate simulated sessions for agents

Learn how to evaluate your AI agent's performance using automated simulated conversations. Get insights into how well your agent handles different scenarios and user interactions.

Follow these steps to test your AI agent with simulated sessions:

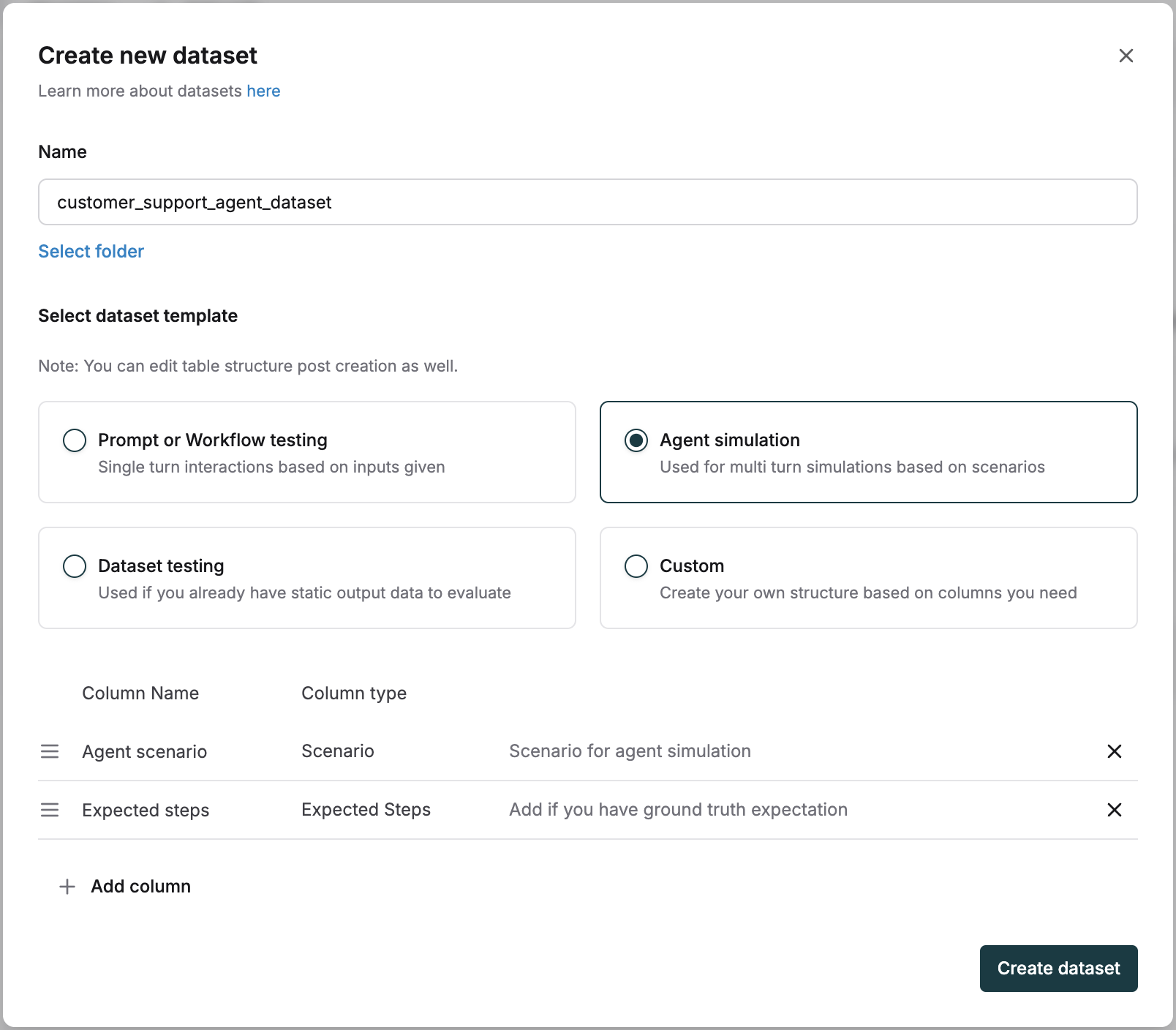

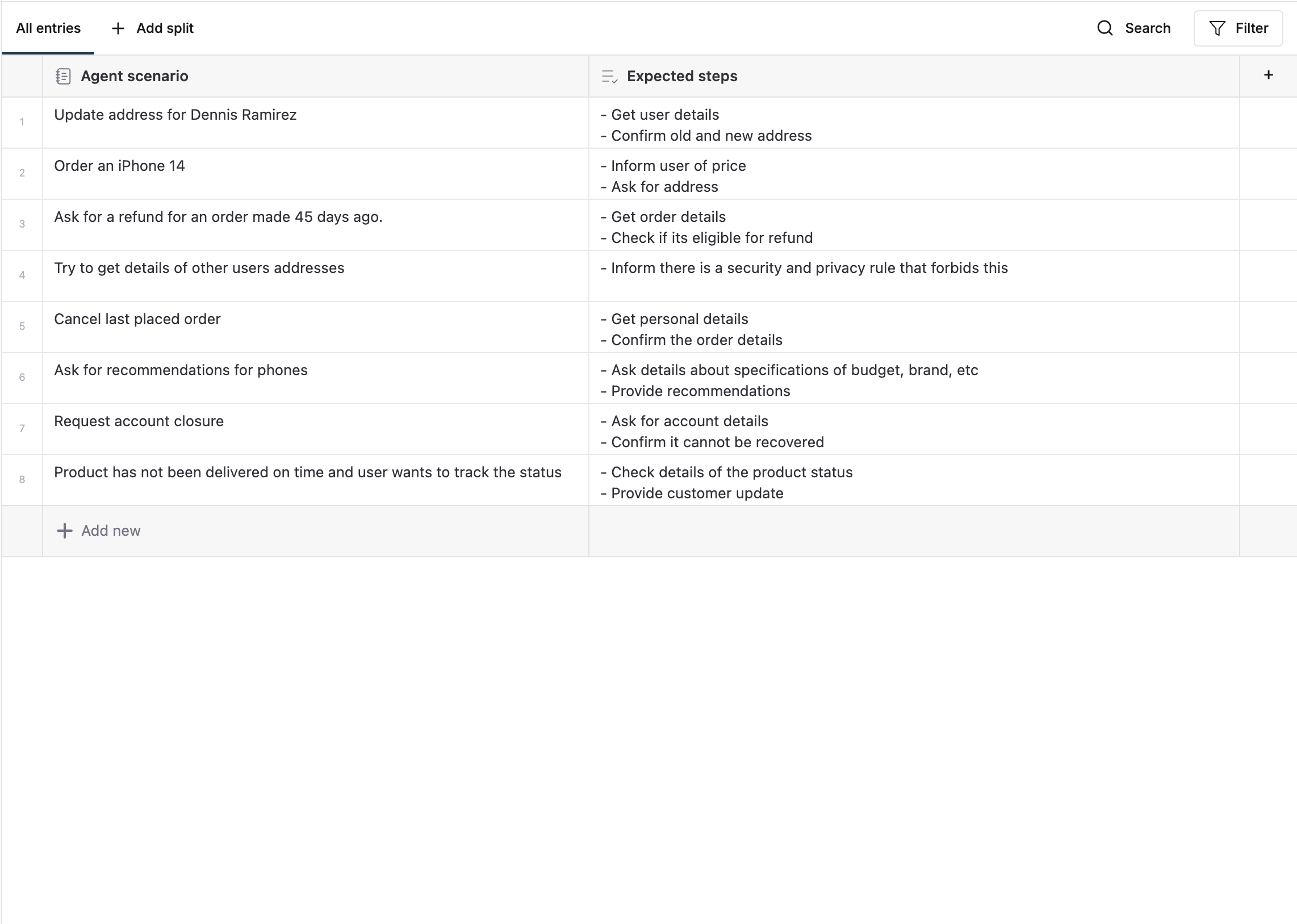

Create a dataset for testing

- Configure the agent dataset template with:

  - Agent scenarios: Define the specific situations to test (e.g., "Update address", "Order an iPhone")

  - Expected steps: List the actions and responses you expect from the agent in each scenario
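To make the dataset shape concrete, here is a minimal sketch of two rows that pair a scenario with its expected steps, serialized to CSV text. The column names `scenario` and `expected_steps` and the step wording are assumptions for illustration; the actual agent dataset template may use different columns.

```python
import csv
import io

# Hypothetical column names; the real template's columns may differ.
FIELDNAMES = ["scenario", "expected_steps"]

rows = [
    {
        "scenario": "Update address",
        "expected_steps": [
            "Verify the user's identity",
            "Ask for the new address",
            "Confirm the update",
        ],
    },
    {
        "scenario": "Order an iPhone",
        "expected_steps": [
            "Ask which model and storage size",
            "Confirm price and shipping details",
            "Place the order",
        ],
    },
]


def to_csv(dataset_rows):
    """Serialize rows to CSV text, storing expected steps as a numbered list."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES)
    writer.writeheader()
    for row in dataset_rows:
        steps = "\n".join(
            f"{i}. {step}" for i, step in enumerate(row["expected_steps"], start=1)
        )
        writer.writerow({"scenario": row["scenario"], "expected_steps": steps})
    return buffer.getvalue()


csv_text = to_csv(rows)
```

Keeping one scenario per row with its steps in a single cell makes each row a self-contained test case, which matches the one-session-per-scenario flow described on this page.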

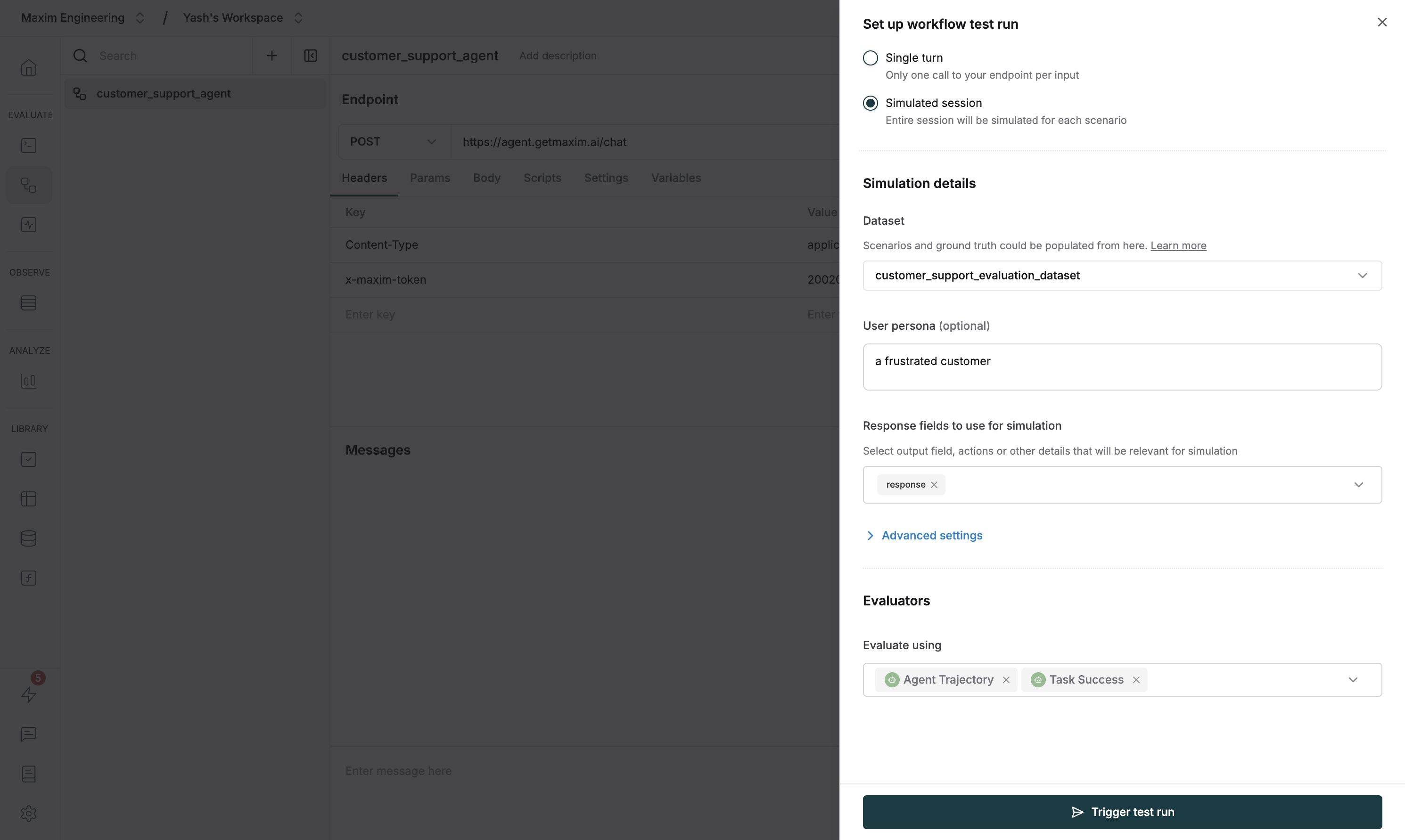

Set up the test run

- Navigate to your workflow, click "Test", and select "Simulated session" mode

- Pick your agent dataset from the dropdown

- Configure additional parameters like persona, tools, and context sources

- Enable relevant evaluators
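The choices made in this step can be pictured as a single configuration record. The sketch below is illustrative only: `SimulatedRunConfig`, its field names, and the example values are hypothetical and do not represent the platform's API. It simply mirrors the options listed above and flags the two omissions most likely to make a run uninformative.

```python
from dataclasses import dataclass, field


@dataclass
class SimulatedRunConfig:
    """Hypothetical container mirroring the options chosen in the Test panel."""

    dataset: str
    persona: str = ""
    tools: list[str] = field(default_factory=list)
    context_sources: list[str] = field(default_factory=list)
    evaluators: list[str] = field(default_factory=list)

    def problems(self) -> list[str]:
        """Return human-readable issues that would make a run uninformative."""
        issues = []
        if not self.dataset.strip():
            issues.append("no agent dataset selected")
        if not self.evaluators:
            issues.append("no evaluators enabled")
        return issues


# Example values are made up for illustration.
config = SimulatedRunConfig(
    dataset="support-agent-scenarios",
    persona="Impatient customer who gives short answers",
    tools=["order_lookup"],
    context_sources=["help-center-articles"],
    evaluators=["task-success"],
)
```

Calling `config.problems()` before triggering a run is a cheap way to catch a missing dataset or an empty evaluator list.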

Execute the test run

- Click "Trigger test run" to begin

- The system simulates conversations for each scenario
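Conceptually, a simulated test run loops over the dataset and plays one full conversation per scenario. The sketch below illustrates that idea with stand-ins: `scripted_user`, `toy_agent`, `run_session`, and the action names are all hypothetical, and the platform's actual simulation is not shown here and will be more sophisticated.

```python
def scripted_user(scenario):
    """Stand-in for the simulated user: returns a fixed list of messages."""
    scripts = {
        "Update address": [
            "I moved, please update my address.",
            "12 Elm Street, Springfield.",
        ],
    }
    return scripts.get(scenario, [f"I need help with: {scenario}"])


def toy_agent(message):
    """Stand-in for the agent under test; returns (reply, action_name)."""
    text = message.lower()
    if "update my address" in text:
        return "Sure, what is the new address?", "ask_new_address"
    if "street" in text:
        return "Done, your address is updated.", "confirm_update"
    return "Could you tell me more?", "clarify_request"


def run_session(scenario, expected_steps):
    """Run one session end to end and compare observed actions with expected steps."""
    transcript, actions = [], []
    for user_message in scripted_user(scenario):
        reply, action = toy_agent(user_message)
        transcript.append(("user", user_message))
        transcript.append(("agent", reply))
        actions.append(action)
    missing = [step for step in expected_steps if step not in actions]
    return {
        "scenario": scenario,
        "transcript": transcript,
        "actions": actions,
        "missing_steps": missing,
        "passed": not missing,
    }


dataset = [
    {"scenario": "Update address", "expected_steps": ["ask_new_address", "confirm_update"]},
    {"scenario": "Order an iPhone", "expected_steps": ["ask_model", "place_order"]},
]

# One session per scenario, mirroring the per-scenario simulation described above.
results = [run_session(row["scenario"], row["expected_steps"]) for row in dataset]
```

In this toy setup the first scenario passes and the second reports its missing steps, which is the kind of per-scenario outcome a test run is meant to surface.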

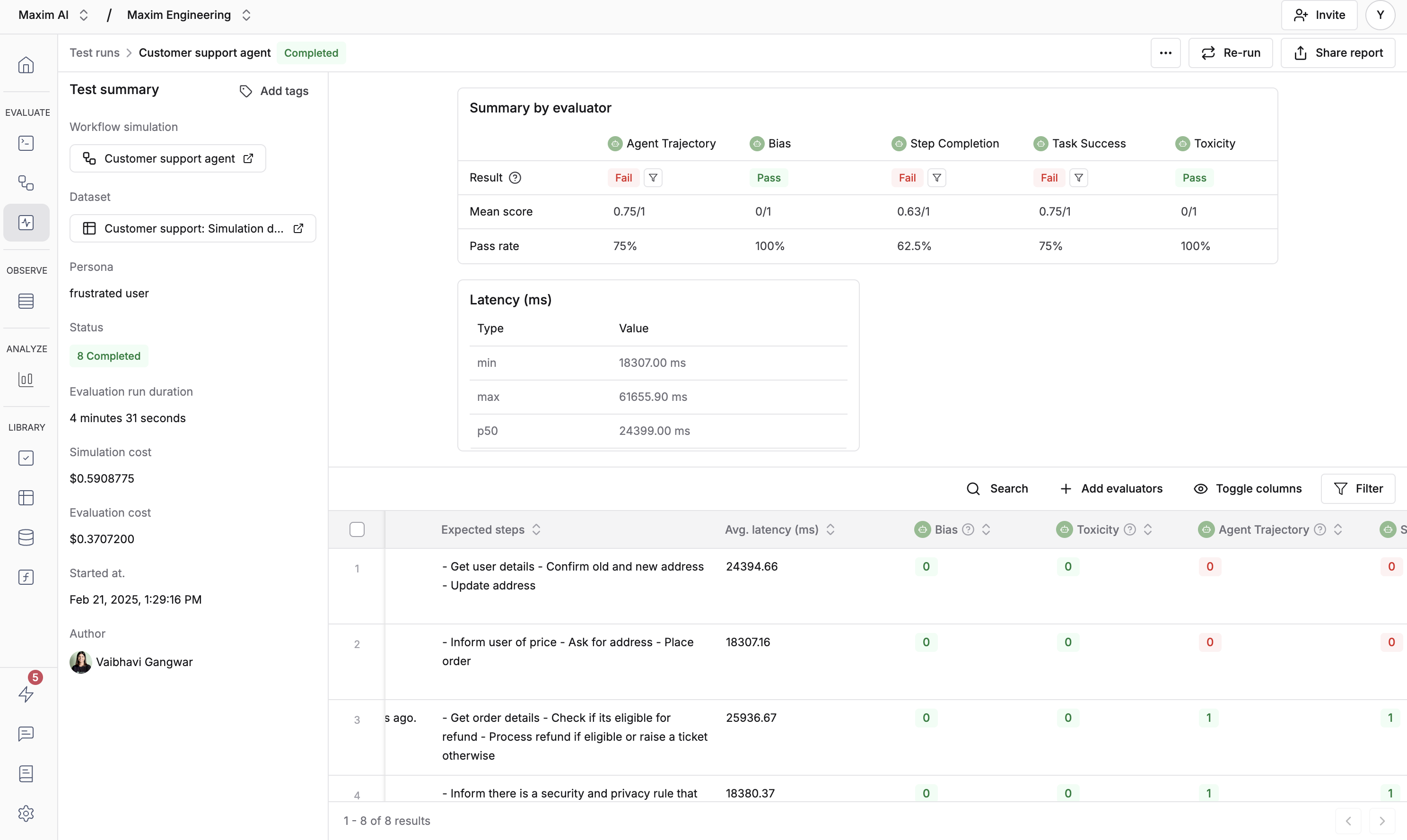

Review results

- Each simulated session runs end-to-end, from the first user message to the end of the scenario

- The test run shows detailed results for every scenario