The following builds upon Evaluate Prompts -> Automate prompt evaluation via CI/CD. Please refer to it if you haven't already.

Automate workflow evaluation via CI/CD

Trigger test runs in CI/CD pipelines to evaluate workflows automatically.

Pre-requisites

In addition to the pre-requisites listed in Evaluate Prompts -> Automate prompt evaluation via CI/CD, you also need a workflow to test.

The complete list of pre-requisites is:

- An API key from Maxim

- A dataset to test against

- Evaluators to evaluate the workflow against the dataset

- A workflow to test

Test runs via CLI

In addition to the options introduced earlier, use the -w flag instead of -p to specify the workflow to test.

Installation

Use the following command template to install the CLI tool (if you are using Windows, please refer to the Windows example as well):

For more details, refer to Evaluate Prompts -> Automate prompt evaluation via CI/CD -> Test runs via CLI.

Triggering a test run

Use this template to trigger a test run:

Here are the arguments/flags that you can pass to the CLI to configure your test run:

| Argument / Flag | Description |
|---|---|
| -w | Workflow ID or IDs; if you pass multiple comma-separated IDs, a comparison run is created. |
| -d | Dataset ID |
| -e | Comma-separated evaluator names, e.g. bias,clarity |
| --json | (Optional) Output the result in JSON format |
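
As a rough illustration of how these flags combine, the Python sketch below assembles the argument list for a workflow test run. Only -w, -d, -e, and --json come from the table; the command prefix (["maxim", "test"]) and all IDs are hypothetical placeholders, so substitute the binary and subcommand from the CLI's trigger template and your own IDs.

```python
import shlex
from typing import Sequence


def build_test_run_args(
    cli_prefix: Sequence[str],
    workflow_ids: Sequence[str],
    dataset_id: str,
    evaluators: Sequence[str],
    as_json: bool = False,
) -> list[str]:
    """Assemble argv for a workflow test run from the documented flags.

    cli_prefix is HYPOTHETICAL: pass the binary (and any subcommand)
    from the CLI's own trigger template.
    """
    if not workflow_ids:
        raise ValueError("at least one workflow ID is required")
    if not evaluators:
        raise ValueError("at least one evaluator name is required")
    args = list(cli_prefix)
    # -w: one ID tests a single workflow; several comma-separated IDs
    # create a comparison run.
    args += ["-w", ",".join(workflow_ids)]
    args += ["-d", dataset_id]
    # -e: comma-separated evaluator names, e.g. bias,clarity
    args += ["-e", ",".join(evaluators)]
    if as_json:
        # --json is optional and switches the output to JSON.
        args.append("--json")
    return args


if __name__ == "__main__":
    argv = build_test_run_args(
        ["maxim", "test"],  # hypothetical binary and subcommand
        ["wf_a", "wf_b"],   # two workflow IDs -> comparison run
        "ds_123",           # hypothetical dataset ID
        ["bias", "clarity"],
        as_json=True,
    )
    # Print the shell-quoted command; run it with subprocess.run(argv, check=True).
    print(shlex.join(argv))
```

Building a list rather than a single string avoids shell-quoting problems when IDs or evaluator names come from CI variables.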

Test runs via GitHub Action

In addition to the inputs introduced earlier, you can set the workflow_id input (in the step's with parameters) instead of prompt_version_id to specify the workflow to test.

Quick Start

To add the GitHub Action to your workflow, start by adding a step that uses maximhq/actions/test-runs@v1 as follows:

Please ensure that you have the following set up:

- GitHub Actions secrets:
  - MAXIM_API_KEY
- GitHub Actions variables:
  - WORKSPACE_ID
  - DATASET_ID
  - WORKFLOW_ID

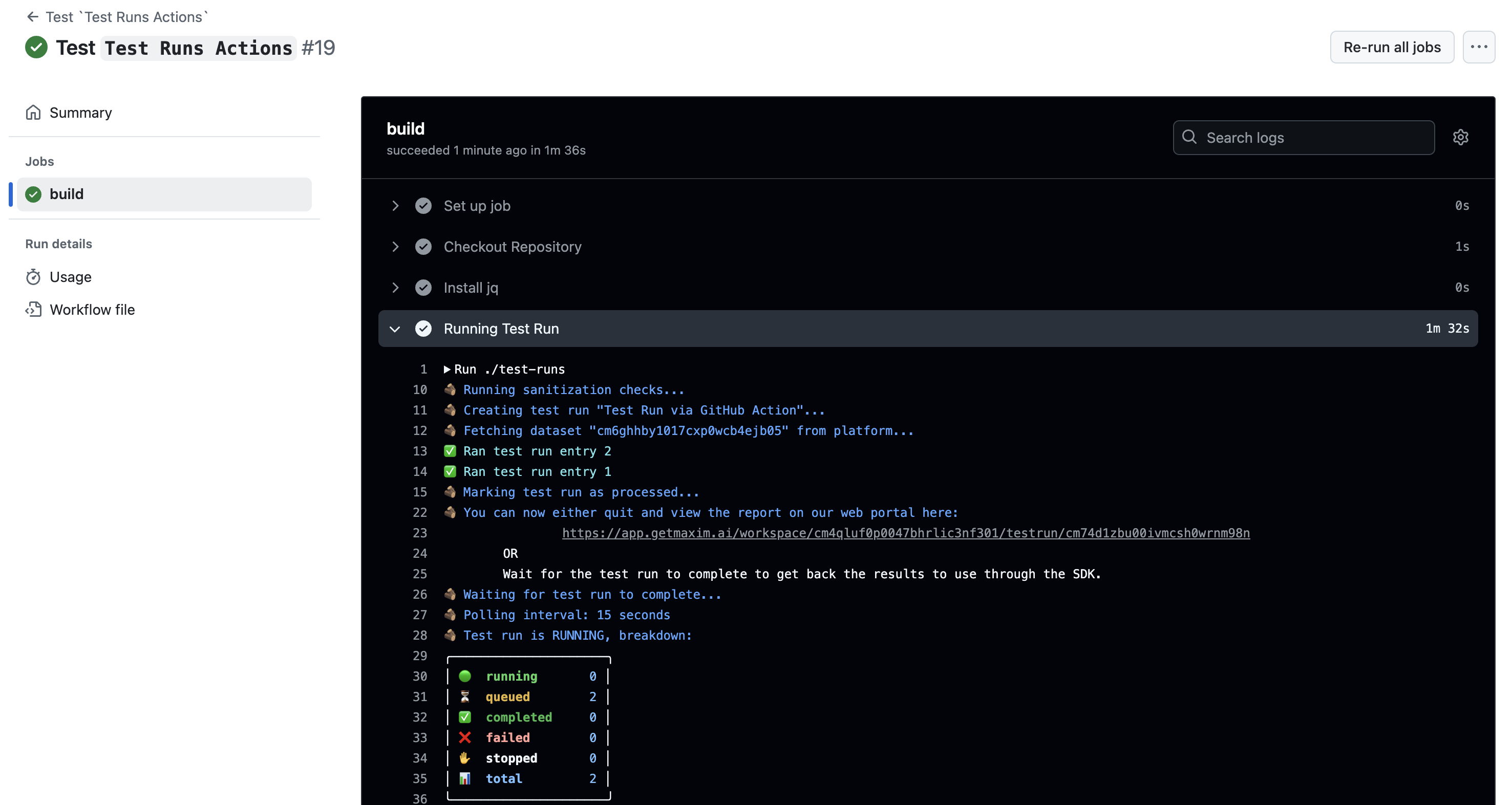

This will trigger a test run on the platform and wait for it to complete before proceeding. The progress of the test run will be displayed in the Running Test Run section of the GitHub Action's logs as displayed below:
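
A pipeline can fail fast if any of the settings listed in the Quick Start setup are missing. The Python sketch below is a hypothetical pre-flight check, not part of maximhq/actions/test-runs@v1. It assumes you expose the secret and variables to a script step as environment variables with the same names, for example MAXIM_API_KEY: ${{ secrets.MAXIM_API_KEY }} and WORKSPACE_ID: ${{ vars.WORKSPACE_ID }}.

```python
import sys
from collections.abc import Mapping

# Settings named in the Quick Start setup list. Reading them from the
# environment is an ASSUMPTION: map them into the step's env block yourself.
REQUIRED_SECRETS = ("MAXIM_API_KEY",)
REQUIRED_VARIABLES = ("WORKSPACE_ID", "DATASET_ID", "WORKFLOW_ID")


def missing_settings(env: Mapping[str, str]) -> list[str]:
    """Return the required setting names that are unset or blank in env."""
    return [
        name
        for name in REQUIRED_SECRETS + REQUIRED_VARIABLES
        if not env.get(name, "").strip()
    ]


def preflight(env: Mapping[str, str]) -> int:
    """Report missing settings on stderr and return a process exit code."""
    missing = missing_settings(env)
    if missing:
        # Report names only; never echo secret values into CI logs.
        print("Missing settings: " + ", ".join(missing), file=sys.stderr)
        return 1
    return 0
```

In the step's script, ending with raise SystemExit(preflight(os.environ)) stops the job before the test run starts whenever a setting is missing.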

Inputs

The following are the inputs that can be used to configure the GitHub Action:

| Name | Description | Required |
|---|---|---|
| api_key | Maxim API key | Yes |
| workspace_id | Workspace ID to run the test run in | Yes |
| test_run_name | Name of the test run | Yes |
| dataset_id | Dataset ID for the test run | Yes |
| workflow_id | Workflow ID to run for the test run (do not use with prompt_version_id) | Yes, unless prompt_version_id is provided |
| prompt_version_id | Prompt version ID to run for the test run (covered in Evaluate Prompts -> Automate prompt evaluation via CI/CD; do not use with workflow_id) | Yes, unless workflow_id is provided |
| context_to_evaluate | Variable name to evaluate; can be any variable used in the workflow or prompt, or a column name | No |
| evaluators | Comma-separated list of evaluator names | No |
| human_evaluation_emails | Comma-separated list of emails to send human evaluations to | No (required if evaluators includes a human evaluator) |
| human_evaluation_instructions | Overall instructions for human evaluators | No |
| concurrency | Maximum number of test run entries that run concurrently | No (defaults to 10) |
| timeout_in_minutes | Fail if the overall test run takes longer than this many minutes | No (defaults to 15) |
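
The rules in this table (four always-required inputs, exactly one of workflow_id or prompt_version_id, and the documented defaults for concurrency and timeout_in_minutes) can be mirrored in a local check. The Python sketch below is hypothetical and does not reflect how the action validates its own inputs. Because evaluator names alone do not reveal which evaluators are human, the caller passes has_human_evaluator explicitly; that flag is an assumption of this sketch.

```python
from collections.abc import Mapping

# Documented defaults. GitHub Action inputs are strings, so keep them as strings.
DEFAULTS = {"concurrency": "10", "timeout_in_minutes": "15"}
ALWAYS_REQUIRED = ("api_key", "workspace_id", "test_run_name", "dataset_id")


def resolve_inputs(
    inputs: Mapping[str, str], has_human_evaluator: bool = False
) -> dict[str, str]:
    """Validate inputs against the table's rules and fill in defaults.

    has_human_evaluator is an ASSUMPTION of this sketch: the caller must
    know whether any name in evaluators refers to a human evaluator.
    """
    # Treat blank strings as "not provided", like an omitted with entry.
    provided = {k: v for k, v in inputs.items() if v.strip()}
    resolved = {**DEFAULTS, **provided}

    missing = [name for name in ALWAYS_REQUIRED if name not in resolved]
    if missing:
        raise ValueError("missing required inputs: " + ", ".join(missing))

    # workflow_id and prompt_version_id are mutually exclusive; one is required.
    if ("workflow_id" in resolved) == ("prompt_version_id" in resolved):
        raise ValueError("provide exactly one of workflow_id or prompt_version_id")

    if has_human_evaluator and "human_evaluation_emails" not in resolved:
        raise ValueError("human_evaluation_emails is required for human evaluators")
    return resolved


if __name__ == "__main__":
    resolved = resolve_inputs({
        "api_key": "my-api-key",          # hypothetical values
        "workspace_id": "my-workspace",
        "test_run_name": "nightly",
        "dataset_id": "my-dataset",
        "workflow_id": "my-workflow",
    })
    print(resolved["concurrency"], resolved["timeout_in_minutes"])
```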

Outputs

If the GitHub Action does not fail, it provides the following outputs:

| Name | Description |
|---|---|
| test_run_result | Result of the test run |
| test_run_report_url | URL of the test run report |
| test_run_failed_indices | Indices of failed test run entries |
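
A later step can act on these outputs, for example to log the report link and the failed entries. The Python sketch below assumes the workflow maps the outputs into environment variables with hypothetical names (FAILED_INDICES, REPORT_URL) from a step with the hypothetical id test_run. It also assumes test_run_failed_indices is either a JSON array or a comma-separated list; the exact format is not specified here, so check it against a real run.

```python
import json
import os


def parse_failed_indices(raw: str) -> list[int]:
    """Parse test_run_failed_indices into a list of ints.

    ASSUMPTION: the value is a JSON array such as "[0, 3]" or a
    comma-separated list such as "0,3"; verify against a real run.
    """
    raw = raw.strip()
    if not raw:
        return []
    if raw.startswith("["):
        return [int(i) for i in json.loads(raw)]
    return [int(part) for part in raw.split(",") if part.strip()]


def summarize(report_url: str, failed: list[int]) -> str:
    """Build a one-line summary for the CI log."""
    if failed:
        return f"Failed entries {failed}; report: {report_url}"
    return f"No failed entries; report: {report_url}"


if __name__ == "__main__":
    # Hypothetical env mapping in the workflow step, e.g.:
    #   FAILED_INDICES: ${{ steps.test_run.outputs.test_run_failed_indices }}
    #   REPORT_URL: ${{ steps.test_run.outputs.test_run_report_url }}
    failed = parse_failed_indices(os.environ.get("FAILED_INDICES", ""))
    print(summarize(os.environ.get("REPORT_URL", ""), failed))
```

Since these outputs exist only when the action does not fail, a step like this typically runs after the test run step succeeds.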

Evaluating Prompts

Please refer to Evaluate Prompts -> Automate prompt evaluation via CI/CD