Create and manage Prompt versions

As teams build AI applications, much of the experimentation involves iterating on prompt structure. To help you collaborate effectively and keep changes organized, Maxim supports prompt versioning and comparison runs across versions.

Create prompt versions

A prompt version is a set of messages and configurations that is published to mark a particular state of the prompt. Versions are used to run tests, compare results, and make deployments.

If a prompt has unpublished changes, a badge indicating this appears next to its name in the header.

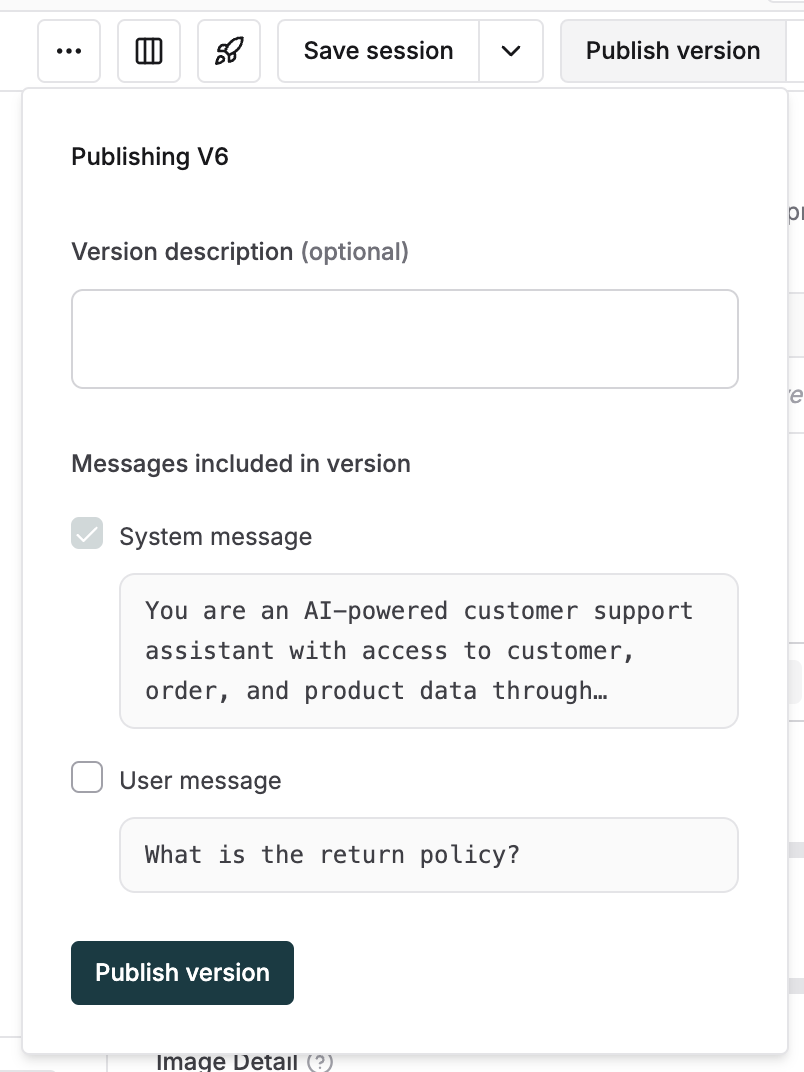
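This page does not describe how Maxim stores versions internally. As a rough mental model only, the hypothetical sketch below treats a version as a frozen copy of the prompt's messages and configuration, and shows an unpublished-changes badge as a comparison between the editable draft and the latest published snapshot. The class names, fields, and `has_unpublished_changes` method are illustrative assumptions, not Maxim's schema or SDK.

```python
import copy
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Message:
    role: str     # e.g. "system", "user", "assistant"
    content: str


@dataclass
class PromptState:
    """Messages plus model configuration (hypothetical model, not Maxim's schema)."""
    messages: list[Message]
    config: dict[str, object] = field(default_factory=dict)


@dataclass
class Prompt:
    name: str
    draft: PromptState                                         # editable working copy
    versions: list[PromptState] = field(default_factory=list)  # published snapshots, oldest first

    def publish(self) -> None:
        # Deep-copy so later draft edits cannot alter an already published version.
        self.versions.append(copy.deepcopy(self.draft))

    def has_unpublished_changes(self) -> bool:
        # Assumption: with nothing published yet, the whole draft counts as unpublished.
        if not self.versions:
            return True
        # Otherwise the badge would show whenever the draft differs from the latest version.
        return self.draft != self.versions[-1]
```

Because `publish` stores a deep copy, editing the draft afterwards flips `has_unpublished_changes()` back to `True` while the stored version stays unchanged.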

To publish a version, click the publish version button in the header and select the messages to include in this version. Optionally, add a description so other team members can easily identify it.

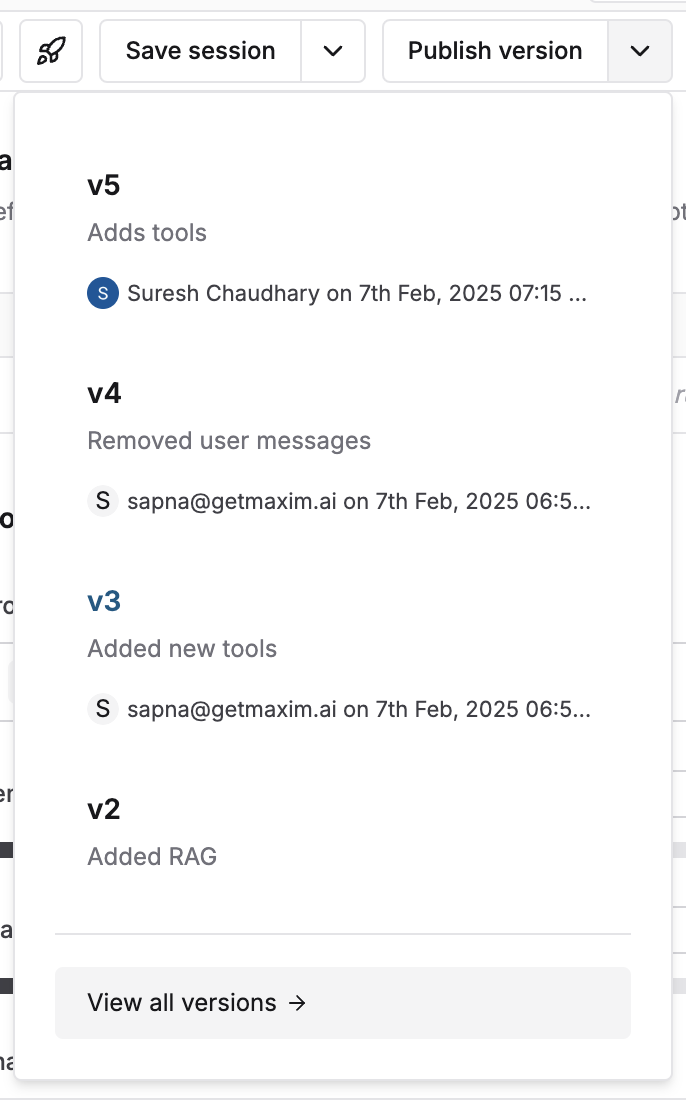
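Publishing happens in the Maxim UI. The sketch below only illustrates the idea behind that step: a subset of the draft's messages, the current configuration, an optional description, and publisher metadata are captured in a new immutable record. `PromptVersion` and `publish_version` are hypothetical names, and sequential version numbering is an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Message:
    role: str
    content: str


@dataclass(frozen=True)
class PromptVersion:
    """Hypothetical immutable record of one published version."""
    number: int
    messages: tuple[Message, ...]
    config: tuple[tuple[str, object], ...]
    description: str
    published_by: str
    published_at: datetime


def publish_version(
    draft_messages: list[Message],
    draft_config: dict[str, object],
    selected: list[int],
    published_by: str,
    existing: list[PromptVersion],
    description: str = "",
) -> list[PromptVersion]:
    """Return a new version list with the selected draft messages appended as a new version."""
    # Keep only the messages the user selected, in their original draft order.
    chosen = tuple(draft_messages[i] for i in sorted(set(selected)))
    version = PromptVersion(
        number=len(existing) + 1,                       # assumption: simple sequential numbering
        messages=chosen,
        config=tuple(sorted(draft_config.items())),     # frozen copy of the configuration
        description=description,                        # optional note for teammates
        published_by=published_by,
        published_at=datetime.now(timezone.utc),
    )
    # Build a new list instead of mutating the caller's history.
    return existing + [version]
```

For example, `publish_version(draft, {"temperature": 0.2}, [0, 1], "alice", [], "Initial version")` would return a one-element list holding version 1.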

To view recent versions, click the arrow next to the publish version button; to see the complete list, use the button at the bottom of the recent versions list. Each version shows who published it and when. Click any version to open it.
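The recent-versions view can be thought of as the version history sorted newest first and truncated, with each entry labeled by publisher and publish date. The hypothetical sketch below models that; `VersionSummary`, `recent_versions`, and the default limit of 5 are assumptions for illustration, not how Maxim builds the list.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class VersionSummary:
    number: int
    published_by: str
    published_at: datetime
    description: str = ""


def recent_versions(versions: list[VersionSummary], limit: Optional[int] = 5) -> list[VersionSummary]:
    """Newest first; the default limit of 5 is an assumed size for the short list."""
    ordered = sorted(versions, key=lambda v: v.published_at, reverse=True)
    # limit=None returns the complete history, like the full version list.
    return ordered if limit is None else ordered[:limit]


def format_entry(v: VersionSummary) -> str:
    # Publisher and publish date, the details shown for each version.
    return f"v{v.number} by {v.published_by} on {v.published_at:%Y-%m-%d}"
```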

Next steps

Measure the quality of your RAG pipeline

Retrieval quality directly affects the quality of your AI application's output. While you test prompts, Maxim lets you connect your RAG pipeline through an API endpoint and evaluates the retrieved context on every run. Context-specific evaluators for precision, recall, and relevance make it easy to see where retrieval quality is low.

Compare Prompt versions

Track changes between different Prompt versions to understand what led to improvements or drops in quality.
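This page doesn't document how Maxim's comparison view works. To illustrate what tracking changes between two versions involves, the hypothetical sketch below uses Python's standard `difflib.unified_diff` to diff message text and a small helper to list changed configuration keys. The `(role, content)` message format and the helper names are assumptions.

```python
import difflib


def render(messages: list[tuple[str, str]]) -> list[str]:
    # A "[role]" header line, then the message content split into lines, for line-based diffing.
    lines: list[str] = []
    for role, content in messages:
        lines.append(f"[{role}]")
        lines.extend(content.splitlines())
    return lines


def diff_versions(old: list[tuple[str, str]], new: list[tuple[str, str]],
                  old_label: str = "v1", new_label: str = "v2") -> str:
    """Unified diff of two versions' messages; an empty string means the text is identical."""
    diff = difflib.unified_diff(render(old), render(new),
                                fromfile=old_label, tofile=new_label, lineterm="")
    return "\n".join(diff)


def config_changes(old: dict, new: dict) -> dict:
    """Map each changed config key to its (old, new) values; missing keys show as None."""
    keys = sorted(old.keys() | new.keys())
    return {k: (old.get(k), new.get(k)) for k in keys if old.get(k) != new.get(k)}
```

Pairing a text diff with a config diff matters because a quality change between versions can come from a parameter such as temperature as well as from wording.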